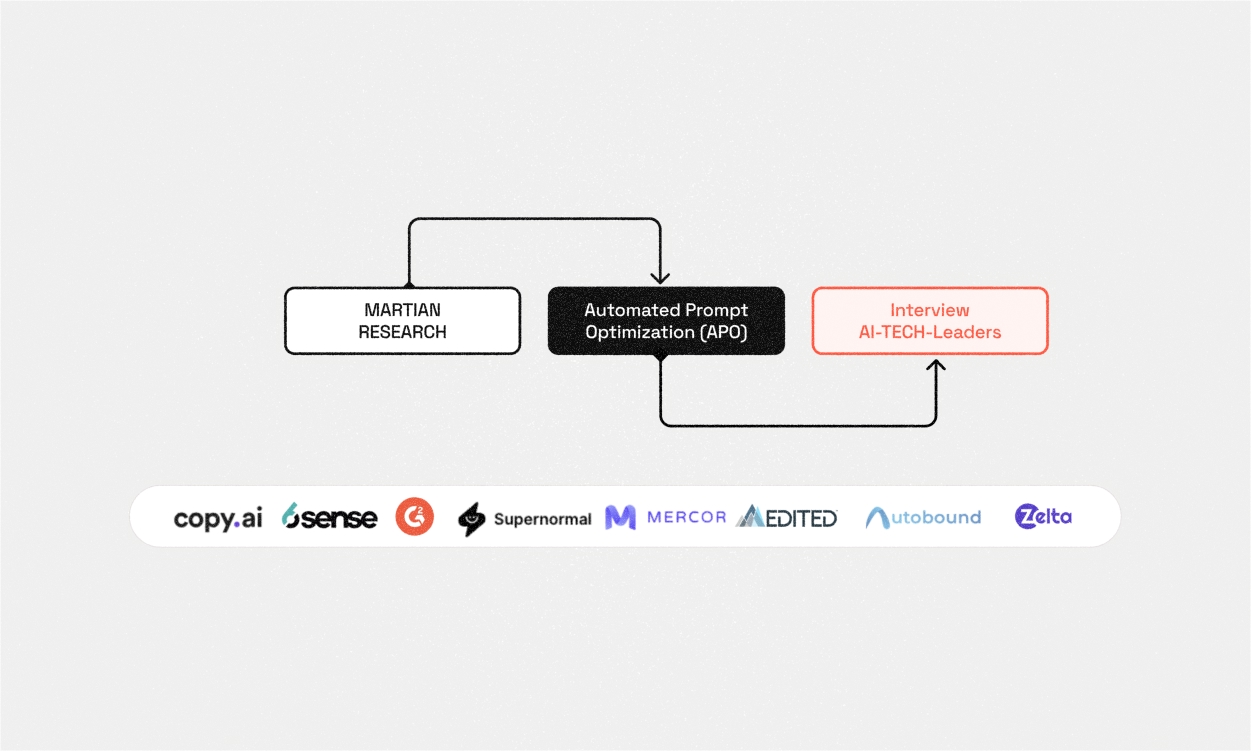

This week we are highlighting research projects from the recent Apart x Martian Mechanistic Router Interpretability Hackathon.

Today’s project is Guardian Loop: Mechanistically Interpretable Micro-Judges with Adversarial Improvement.

The project in a nutshell

Efstathios Siatras and Man Kit Chan built a mechanistically interpretable classifier to pre-filter prompts for safety. They also showed that this system could be extended to other categories of pre-filtering, such as feasibility (How likely is it that the prompt will elicit a factual or useful response?), and proposed an evolutionary framework for discovering new adversarial prompts.

The Safety Judge

The Safety Judge is a classifier built by fine-tuning Llama 3.1 8B Instruct. The team froze the first 20 layers (to preserve the model’s language understanding abilities), and then fine-tuned the remaining layers on adversarial examples from datasets such as ToxicChat, JailbreakBench, and RealToxicityPrompts. The model was trained to output “True” or “False” as the next token.
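As a rough illustration (not the team's actual code), the freezing rule and the True/False readout could look like the sketch below. It assumes parameter names follow the Hugging Face Llama layout (`model.layers.<i>. ...`), and it assumes the embeddings stay frozen while the final norm and LM head are trained; the post does not specify either detail. The helper names `is_trainable` and `p_true` are hypothetical.

```python
import math
import re

# Assumption: parameter names look like "model.layers.17.mlp.up_proj.weight",
# as in Hugging Face Llama checkpoints. Adjust the pattern for other layouts.
_LAYER_RE = re.compile(r"(?:^|\.)layers\.(\d+)\.")


def is_trainable(param_name: str, n_frozen: int = 20) -> bool:
    """Return True if the parameter should be updated during fine-tuning."""
    match = _LAYER_RE.search(param_name)
    if match is None:
        # Not inside a transformer block (embeddings, final norm, LM head).
        # Assumption: embeddings stay frozen, the rest is trained.
        return "embed" not in param_name
    # Blocks 0 .. n_frozen-1 are frozen; later blocks are fine-tuned.
    return int(match.group(1)) >= n_frozen


def p_true(logit_true: float, logit_false: float) -> float:
    """Two-way softmax over the "True" and "False" next-token logits."""
    return 1.0 / (1.0 + math.exp(logit_false - logit_true))


# With a loaded PyTorch model, the rule would be applied roughly as:
#   for name, param in model.named_parameters():
#       param.requires_grad = is_trainable(name)
```

Restricting the readout to the two answer tokens yields a single score per prompt, which can then be thresholded or used to plot a ROC curve.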

The results were promising, especially given the short working period of the hackathon:

- Accuracy: 85.0%

- Precision: 80.0%

- Recall: 95.4%

- F1 Score: 87.0%

- AUC-ROC: 94.6%
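These numbers are internally consistent: F1 is the harmonic mean of precision and recall, and plugging in the reported values reproduces the reported score. A quick check:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Values reported above, expressed as fractions.
reported_precision = 0.800
reported_recall = 0.954

f1 = f1_score(reported_precision, reported_recall)
print(f"F1 = {f1:.3f}")  # F1 = 0.870
```

The gap between recall (95.4%) and precision (80.0%) means the judge misses few positive cases and more of its errors are false positives.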

Mechanistic Interpretability

An exciting aspect of this project was the use of Mechanistic Interpretability techniques that reveal how the safety judge works and why specific prompts are flagged as unsafe.

Neuron Activation Mapping showed that specific neurons became responsible for safety, and that these “safety neurons” appeared in clusters. Layer Activation Mapping showed that the model learned to do safety analysis in the top few layers, where unsafe prompts produced higher activation than safe ones. The last layer reversed this pattern: safe prompts spiked activation while unsafe prompts suppressed it. Circuit tracing showed that safe and unsafe prompts triggered diverging circuit pathways through the model, and that these pathways became denser and more interconnected as training proceeded.
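To make the layer-level comparison concrete, here is one simple way such a measurement could be computed. This is a sketch under assumptions, not the team's method: it assumes activations have already been extracted as one vector per layer per prompt, and it uses the mean L2 norm as the summary statistic. The function names are hypothetical.

```python
import math
from statistics import fmean
from typing import List, Sequence

Vector = Sequence[float]
PromptActivations = Sequence[Vector]  # one activation vector per layer


def mean_layer_norms(batch: Sequence[PromptActivations]) -> List[float]:
    """Average L2 norm of the activation vector at each layer over a batch."""
    n_layers = len(batch[0])
    return [
        fmean(math.sqrt(sum(x * x for x in prompt[layer])) for prompt in batch)
        for layer in range(n_layers)
    ]


def layer_activation_gap(
    safe: Sequence[PromptActivations],
    unsafe: Sequence[PromptActivations],
) -> List[float]:
    """Per-layer (unsafe - safe) mean norm.

    Positive values mean unsafe prompts activate that layer more strongly.
    """
    safe_norms = mean_layer_norms(safe)
    unsafe_norms = mean_layer_norms(unsafe)
    return [u - s for s, u in zip(safe_norms, unsafe_norms)]
```

Under the pattern described above, the gap would be positive in the upper layers and negative in the last one.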

Of particular interest was the use of gradient-based methods to attribute safety/non-safety to specific words within the prompt. This can be used to provide a human-understandable explanation of why a prompt was flagged as unsafe.
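The post does not say which gradient-based method the team used. One common choice is gradient × input, where each token's attribution is the gradient of the judge's score with respect to that token's embedding, multiplied elementwise by the embedding and summed. The sketch below is an assumption-laden illustration: `score` is a hypothetical stand-in for the judge, and central finite differences stand in for the backpropagation a real model would use.

```python
from typing import Callable, List, Sequence

Embedding = List[float]
ScoreFn = Callable[[Sequence[Embedding]], float]


def grad_times_input(
    score: ScoreFn,
    embeddings: Sequence[Embedding],
    eps: float = 1e-5,
) -> List[float]:
    """Per-token attribution: sum_j d(score)/d(e_ij) * e_ij.

    Gradients are estimated numerically so the sketch needs no autograd
    library; a real judge would obtain them with a backward pass.
    """
    attributions = []
    for i, emb in enumerate(embeddings):
        total = 0.0
        for j, value in enumerate(emb):
            # Perturb one embedding coordinate in each direction.
            plus = [list(e) for e in embeddings]
            minus = [list(e) for e in embeddings]
            plus[i][j] = value + eps
            minus[i][j] = value - eps
            grad = (score(plus) - score(minus)) / (2 * eps)
            total += grad * value
        attributions.append(total)
    return attributions
```

Tokens with large positive attributions push the score up and tokens with large negative attributions push it down, which is what makes a per-word explanation possible.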

Future Work

The Safety Judge was just the beginning. The team laid out two additional directions for work, and we’re excited about both.

Feasibility Judge

The idea behind the Feasibility Judge is to use a training framework similar to the Safety Judge’s to assess whether a target model is capable of answering a question accurately and without hallucination.

Creating and training the Feasibility Judge is a little more complicated than the Safety Judge because it isn’t just assessing prompts, but assessing prompts in the context of a specific target LLM. This requires acquiring or creating a set of prompts, generating responses from a target LLM, and evaluating those responses for accuracy.
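The three steps can be sketched as a small labeling loop. This is a minimal sketch, not the team's pipeline: `generate` stands in for a call to the target LLM and `grade` for an expert evaluator, and both are hypothetical callables supplied by the caller.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class FeasibilityExample:
    prompt: str
    response: str
    feasible: bool  # True if the target model's answer was judged accurate


def build_feasibility_dataset(
    prompts: Iterable[str],
    generate: Callable[[str], str],      # hypothetical: queries the target LLM
    grade: Callable[[str, str], bool],   # hypothetical: evaluates the response
) -> List[FeasibilityExample]:
    """Label each prompt by whether the target model answered it accurately."""
    examples = []
    for prompt in prompts:
        response = generate(prompt)
        examples.append(
            FeasibilityExample(prompt, response, grade(prompt, response))
        )
    return examples
```

Because the label depends on `generate`, the resulting dataset is specific to one target model; a judge for a different model needs its own labels.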

The team made some progress in this direction, creating a dataset of 1,000 of these examples, targeting responses from Llama 3.3 70B and using Martian’s judging framework with GPT-4o as an expert evaluator. Unfortunately, training results were “underwhelming,” but the concept is solid and their dataset is available for future work.

Open-Ended Adversarial Framework

Based on what they learned from building the Safety and Feasibility Judges, the team also proposed an Open-Ended Adversarial Framework to automate the discovery of new adversarial prompts. The framework would use a two-dimensional matrix of risk type (violence, hate speech, sexual content, etc.) and evasion strategy (role playing, hypotheticals, academic framing, etc.). The space of each cell would then be explored through prompt mutation and LLM-driven rewriting. The new adversarial prompts could then be used for lightweight fine-tuning of a Safety Judge.
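One way to picture the proposal is as an archive with one slot per (risk type, evasion strategy) cell, where each slot keeps the best prompt found so far. The sketch below is our own simplified reading, not the team's design: `seed_prompt`, `mutate`, and `fitness` are hypothetical callables (for example, `mutate` could wrap an LLM rewriter, and `fitness` could measure how strongly a prompt fools the current judge).

```python
import random
from typing import Callable, Dict, Sequence, Tuple

Cell = Tuple[str, str]  # (risk type, evasion strategy)


def explore_grid(
    risk_types: Sequence[str],
    strategies: Sequence[str],
    seed_prompt: Callable[[str, str], str],        # hypothetical starting prompt
    mutate: Callable[[str, random.Random], str],   # hypothetical rewriter
    fitness: Callable[[str], float],               # hypothetical scoring function
    iterations: int = 100,
    seed: int = 0,
) -> Dict[Cell, Tuple[str, float]]:
    """Keep the highest-scoring prompt found so far in every grid cell."""
    rng = random.Random(seed)
    archive: Dict[Cell, Tuple[str, float]] = {}
    for risk in risk_types:
        for strategy in strategies:
            prompt = seed_prompt(risk, strategy)
            archive[(risk, strategy)] = (prompt, fitness(prompt))

    cells = list(archive)
    for _ in range(iterations):
        cell = rng.choice(cells)
        parent, best_score = archive[cell]
        child = mutate(parent, rng)
        child_score = fitness(child)
        if child_score > best_score:  # replace only on improvement
            archive[cell] = (child, child_score)
    return archive
```

Keeping a separate winner per cell rewards coverage of the whole grid, so the search does not collapse onto a single risk type or strategy.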

Why is Martian excited about this research?

We are very excited about the direction of this research and have chosen to fund ongoing work by this team. The work closely aligns with Martian’s own research and product development goals in a few ways.

Understanding How a Model Responds to Prompts

Martian’s core product, model routing, depends on our ability to predict the behavior of models, so that we can route efficiently to the one that will give the best response. The Feasibility Judge addresses that requirement directly. While model training during the limited time of the hackathon showed disappointing results, we believe that this is a promising direction for research that will bear fruit given additional time and resources.

AI Safety in a Multi-Model World

The potential for prompt evaluators like the Safety Judge to make LLM-powered systems safer is clear. An especially exciting aspect of this approach is that it does not depend on the safety features of any specific model, as it’s a pre-inference filter.

In the context of Martian’s model routing, this means we could potentially use the same safety filtering mechanism across all models, including small and specialist models that lack the robust safety mechanisms built into flagship models like GPT-4o. This would make multi-model systems more tenable.
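In code, a model-agnostic pre-inference filter amounts to a gate in front of the router. The following is a hypothetical sketch rather than Martian's implementation: `judge_unsafe_prob`, `pick_model`, and the `models` mapping are all placeholder names, and the 0.5 threshold is an arbitrary default.

```python
from typing import Callable, Dict, Optional


def guarded_call(
    prompt: str,
    judge_unsafe_prob: Callable[[str], float],   # hypothetical safety judge
    models: Dict[str, Callable[[str], str]],     # hypothetical model clients
    pick_model: Callable[[str], str],            # hypothetical router
    threshold: float = 0.5,
) -> Optional[str]:
    """Run the judge first; only route to a model if the prompt passes."""
    if judge_unsafe_prob(prompt) >= threshold:
        return None  # blocked before any model is invoked
    model_name = pick_model(prompt)
    return models[model_name](prompt)
```

The same gate applies whichever model the router picks, and a blocked prompt never reaches a model, so no tokens are generated for it.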

Cost reduction

If a model can’t, or shouldn’t, respond to a particular prompt, avoiding generation altogether provides an obvious cost saving; the cheapest tokens are the ones you don’t generate in the first place. This aligns perfectly with our goal of lowering costs while maintaining or improving response quality.

Understanding Intelligence

Martian’s mission is to understand intelligence.

The current generation of LLM systems shows that you can get pretty far in utilizing intelligence without quite understanding it. It’s a bit like alchemy; you can make a lot of interesting things without knowing what’s actually happening inside your beakers and flasks. But to take the giant steps forward, you need chemistry. You need a science of what’s really going on.

That’s why we are thrilled that the team didn’t just train a black box Bad Prompt Classifier. As useful as that might be, it wouldn’t advance our understanding of what’s going on inside the model. Being able to trace specific activation patterns in the Judge, and to attribute classification predictions to specific words in prompts, helps us understand how the model works, why it works, and how we might improve it in the future.

Cost reduction, safety improvements, and better routing are all immediately useful, and we will no doubt incorporate the lessons learned here into our product work. But we’re most excited by the glimmer of understanding provided by work like this. It may be a tiny step, but it’s a step in the direction we are heading.

Try out Martian

We fund research like this for two reasons:

- Our mission is to understand intelligence.

- Our product depends on the understanding that can only come from research.

Martian’s research powers our model gateway, which includes our recently released Martian Code Router. By understanding how LLMs generate code, we can improve their ability to do so and know when to use different code generation models. Through this combination of optimization and routing, we can outperform any individual model.

Subscribe

This is the first post in a series highlighting research we are supporting in partnership with Apart Research. To make sure you don’t miss any of this series, and to keep up with other exciting research and product developments at Martian, be sure to subscribe to our blog (RSS), and follow us on X and LinkedIn.